Lossy data compression methods have captured my attention and imagination from two orthogonal sources: my day job and Victus Media.

A Curious Coincidence: Photons, Neural Compression, and Perception

Long have I wondered how our minds perform the enormous processing task of transforming incident light into a three-dimensional reality while triggering memory and understanding. After a great deal of reading and many years working on sensor simulations, I have only a layman's understanding of the process. Join me on a journey that crosses thousands of light years to illuminate the magic of our minds.

Photons from Distant Galaxies Become Information to Our Minds

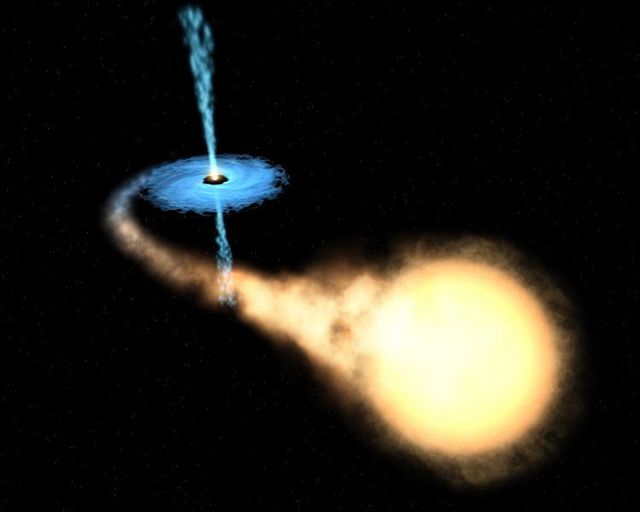

Let's begin by tracing photons from a distant quasar* at the edge of the universe all the way to a human observer looking at telescope data. The photons leave their energetic home and traverse space and time over billions of years. Their course may bend many times as they pass near other galaxies, until they eventually pass close to our own sun and enter an enormous telescope aperture. Let's gloss over the complex signal processing that turns the raw data into imagery, and instead follow the newly made light from a human-readable display^. The light finds its way to curious human eyes. For the next step in the information chain, I'll borrow from an earlier post on digital and optical images:

Our visual system quantizes incoming photons in a manner similar to a digital focal plane. Rods give us black-and-white vision in low-light conditions, while the spatial arrangement of our cones captures incident photons for color vision. The majority of cones are sensitive to red light (64%), some to green (32%), and only a tiny minority to blue light (2%). Light-sensitive proteins in the cones trigger a nerve response that travels down the optic nerves to the parts of the brain that transform these signals into three-dimensional representations of our surroundings.

Light from sources closer to home, like this blog post on your display, is raw data that your senses use to compose a conscious image of your surroundings. But how do these incident photons become a human concept? One vital piece of the puzzle is massive data compression.

Biological Optical Data Compression

Researchers have calculated that the human retina can transmit data at roughly 10 million bits per second. That rate matches the speed of older 10 Mbit/s Ethernet cables.

What data rate is available at the visual cortex?

From an article in Scientific American (behind a paywall):

Visual information, for instance, degrades significantly as it passes from the eye to the visual cortex.

Of the virtually unlimited information available in the world around us, the equivalent of 10 billion bits per second arrives on the retina at the back of the eye. Because the optic nerve attached to the retina has only a million output connections, just six million bits per second can leave the retina, and only 10,000 bits per second make it to the visual cortex.

After further processing, visual information feeds into the brain regions responsible for forming our conscious perception. Surprisingly, the amount of information constituting that conscious perception is less than 100 bits per second. Such a thin stream of data probably could not produce a perception if that were all the brain took into account; the intrinsic activity must play a role.

Yet another indication of the brain's intrinsic processing power comes from counting the number of synapses, the contact points between neurons. In the visual cortex, the number of synapses devoted to incoming visual information is less than 10 percent of those present. Thus, the vast majority must represent internal connections among neurons in that brain region.
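To put the quoted figures in perspective, here is a small Python calculation of how much the information rate shrinks at each stage. The rates are the article's rough estimates (with 100 bits per second treated as an upper bound for conscious perception), and the stage labels and function name are my own, so read the results as order-of-magnitude reduction factors rather than measured compression ratios.

```python
# Approximate information rates (bits per second) from the Scientific
# American excerpt above; the article gives them as rough estimates.
STAGES = [
    ("arriving at the retina", 10_000_000_000),
    ("leaving the retina", 6_000_000),
    ("reaching the visual cortex", 10_000),
    ("conscious perception", 100),
]

def reduction_factors(stages):
    """Return (label, rate, factor vs. previous stage, factor vs. first stage)."""
    first_rate = stages[0][1]
    prev_rate = first_rate
    rows = []
    for label, rate in stages:
        rows.append((label, rate, prev_rate / rate, first_rate / rate))
        prev_rate = rate
    return rows

for label, rate, step, total in reduction_factors(STAGES):
    print(f"{label:<28}{rate:>16,} bit/s   step {step:>7,.0f}x   overall {total:>13,.0f}x")
```

The overall factor works out to about 100 million to one, and the single largest step (roughly 1,700 to one) happens before the signal even leaves the eye.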

So our visual system distills roughly 10 billion bits per second of incident light into fewer than 100 bits per second of conscious perception. Although the compression is lossy, it is good enough for us to interact with our surroundings and respond rapidly to motion (reflexes & frontal cortex). My hypothesis is that our mind continually estimates our surroundings and updates that estimate with a minuscule fraction of the raw input data (the ultimate change detection). I can only guess that massively parallel pattern matching compresses the vital differences at each moment. This compressed collection of neural signals updates our perception of reality as we continually track and estimate the world around us.
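To make the change-detection idea concrete, here is a toy Python sketch. It is only an analogy, not a model of how neurons work: an observer keeps an internal estimate of a scene and receives just the pixel values that differ from that estimate by more than a threshold, similar in spirit to conditional replenishment in early video coding. The scene, the noise level, the threshold of 8, and all function names are hypothetical choices for illustration.

```python
import random

# Toy analogy only: scene, noise, threshold and names are hypothetical.

def encode_changes(estimate, frame, threshold):
    """Return (index, value) pairs where the new frame differs from the
    current estimate by more than `threshold`. Smaller differences are
    discarded, which is what makes the scheme lossy."""
    return [(i, v) for i, (e, v) in enumerate(zip(estimate, frame))
            if abs(v - e) > threshold]

def apply_changes(estimate, changes):
    """Update the internal estimate in place with the transmitted changes."""
    for i, v in changes:
        estimate[i] = v

def simulate(num_pixels=10_000, num_frames=50, threshold=8, seed=1):
    """Static background with small sensor noise plus one 100-pixel
    'moving object' per frame. Returns (values sent, values seen,
    worst-case error between the estimate and the last frame)."""
    rng = random.Random(seed)
    world = [rng.randint(0, 255) for _ in range(num_pixels)]
    estimate = list(world)  # assume the observer starts with an accurate picture
    sent = 0
    frame = world
    for _ in range(num_frames):
        # Small noise (+/-3) everywhere, clamped to the 0..255 range.
        frame = [min(255, max(0, p + rng.randint(-3, 3))) for p in world]
        # A contrasting 100-pixel block at a random position stands in for motion.
        start = rng.randrange(num_pixels - 100)
        for i in range(start, start + 100):
            frame[i] = 255 - frame[i]
        changes = encode_changes(estimate, frame, threshold)
        apply_changes(estimate, changes)
        sent += len(changes)
    max_error = max(abs(e - f) for e, f in zip(estimate, frame))
    return sent, num_pixels * num_frames, max_error

sent, seen, max_error = simulate()
print(f"sent {sent:,} of {seen:,} values ({sent / seen:.1%}), max error {max_error}")
```

Because the background noise never exceeds the threshold, only the moving block and the pixels it leaves behind get transmitted, so a few percent of the raw values keep the estimate within the threshold of every pixel. Raising the threshold sends less data at the cost of a coarser picture.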

Notes:

*Quasar: there is now a scientific consensus that a quasar is a compact region in the centre of a massive galaxy surrounding the central supermassive black hole. (From the Wikipedia article linked at the start of the post.)

^Visualization of data: Purely visual representation of distant astronomical objects has long been a challenge for artists and masters of scale (who exaggerate structures for display), and this is how non-experts experience the data.