Update: The greatest part about being obviously and publicly wrong is that smart folks are generous about pointing out the truth behind the atrocities committed. Thanks to igouy for the corrections. My brain mangled the domain into a source and an OS, and that's been corrected below.

One of the biggest gains for any specific implementation, independent of the language(s) it is written in, comes from having experts spend time profiling and optimizing system performance (so don't jump to conclusions). That said, standard benchmarks do allow broad comparisons between language implementations.

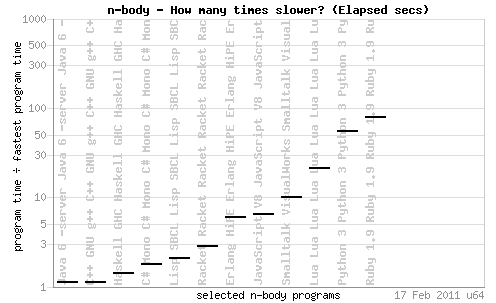

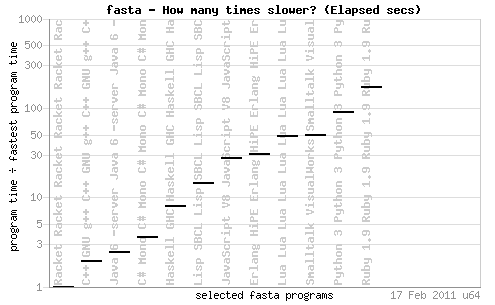

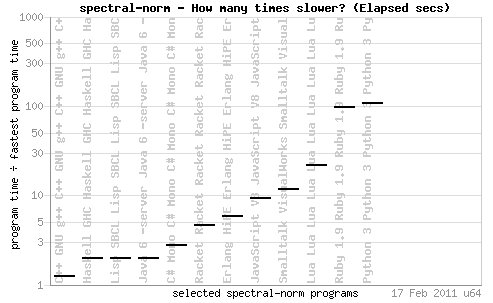

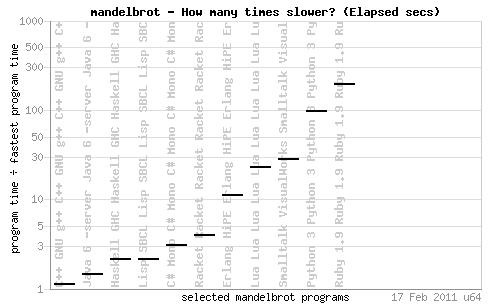

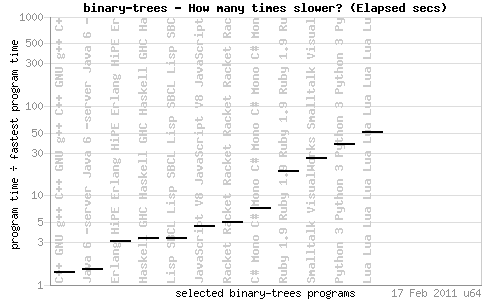

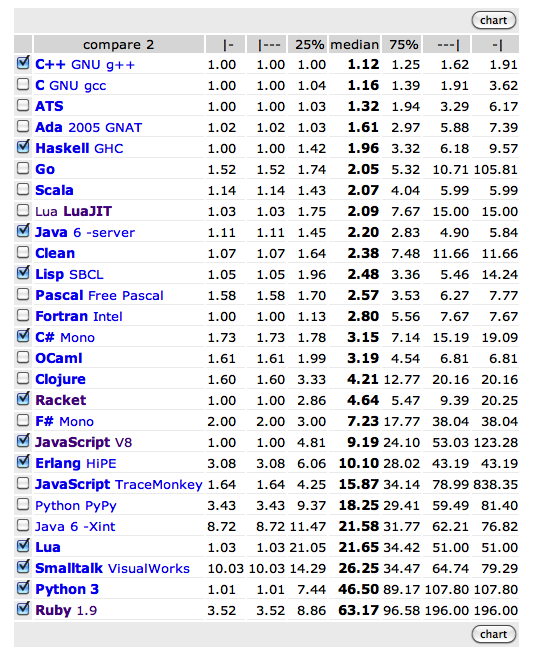

I spent some time this morning reading about the performance of dynamic language implementations (mainly Ruby, Lua and JavaScript) by digging into the shootouts (The Computer Language Benchmarks Game, hosted at alioth.debian.org with measurements on Ubuntu Linux). I wanted to get a feel for execution times between languages overall (vague) and for specific algorithms. You can think of the latest GCC C/C++ compiler as the gold standard of execution speed for these benchmarks, and its results help frame the other run times (i.e. just as fast, almost as fast, way freakin' slower).

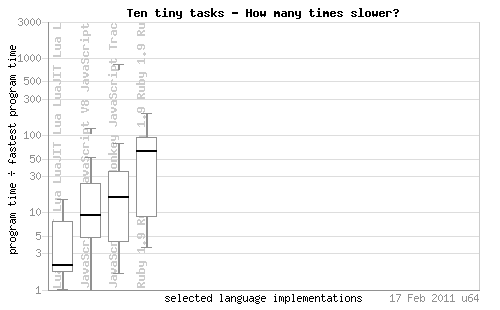

The single-core results show each implementation's run time as a multiple of the fastest run time:

- 1 = fastest
- 63.17 = Ruby :(
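The "multiple of the fastest" scale is simply each run time divided by the smallest run time for that benchmark. A minimal Python sketch of the arithmetic, using made-up times (these are hypothetical numbers, not Benchmarks Game measurements):

```python
# Hypothetical run times in seconds for one benchmark; NOT real shootout data.
run_times = {
    "C (gcc)": 2.0,
    "LuaJIT": 4.0,
    "Ruby": 120.0,
}


def relative_to_fastest(times):
    """Return each run time as a multiple of the fastest (smallest) time."""
    fastest = min(times.values())
    return {name: t / fastest for name, t in times.items()}


ratios = relative_to_fastest(run_times)

# Print from fastest to slowest, so the fastest entry reads 1.00.
for name, ratio in sorted(ratios.items(), key=lambda kv: kv[1]):
    print(f"{name:10s} {ratio:6.2f}")
```

With these invented inputs, C reads 1.00 and Ruby reads 60.00, which is the same kind of number as the 63.17 above.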

Fortunately for my own needs, the optimization I have to worry about at home is mostly data shuffling, which isn't very sensitive to computation time. At work we have mixed sensitivity to processing speed and memory handling, since most of our data is localized. For a handful of specific benchmarks, check the end of this post.

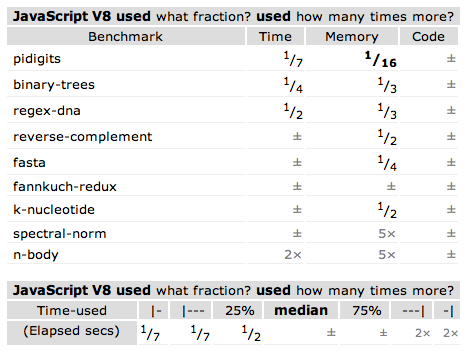

One of the benchmark comparisons that piqued my interest shed further light on the question: which is the faster JavaScript engine, V8 or TraceMonkey? V8 edges TraceMonkey out in most tests but is twice as slow on the n-body benchmark (gravity is a harsh mistress).

And a box chart with LuaJIT and Ruby added:

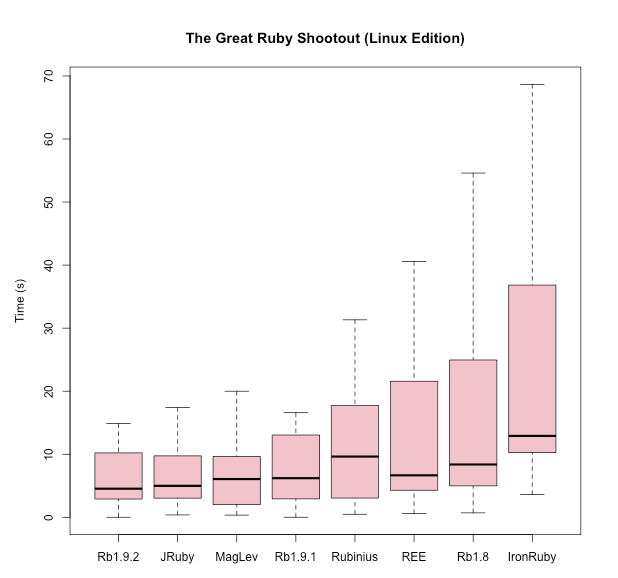
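A box chart like this one summarizes, per implementation, how its "multiple of the fastest" ratio is spread across many benchmarks. Here is a rough Python sketch of that summary, assuming each box covers one implementation's per-benchmark ratios; the timings are invented for illustration and `box_summary` is a hypothetical helper, not anything from the Benchmarks Game itself (requires Python 3.8+ for `statistics.quantiles`):

```python
import statistics

# Hypothetical per-benchmark times in seconds; NOT real shootout data.
times = {
    "n-body":       {"C (gcc)": 10.0, "LuaJIT": 20.0, "Ruby": 600.0},
    "mandelbrot":   {"C (gcc)": 5.0,  "LuaJIT": 15.0, "Ruby": 250.0},
    "fannkuch":     {"C (gcc)": 8.0,  "LuaJIT": 12.0, "Ruby": 400.0},
    "spectralnorm": {"C (gcc)": 4.0,  "LuaJIT": 6.0,  "Ruby": 200.0},
}


def box_summary(times):
    """Per implementation: (min, Q1, median, Q3, max) of time / fastest time."""
    ratios = {}
    for results in times.values():
        # Normalize each benchmark against its own fastest implementation.
        fastest = min(results.values())
        for impl, t in results.items():
            ratios.setdefault(impl, []).append(t / fastest)

    summary = {}
    for impl, rs in ratios.items():
        # n=4 yields the three quartile cut points (Q1, median, Q3).
        q1, med, q3 = statistics.quantiles(rs, n=4)
        summary[impl] = (min(rs), q1, med, q3, max(rs))
    return summary


summary = box_summary(times)
for impl, (lo, q1, med, q3, hi) in summary.items():
    print(f"{impl:8s} min={lo:.2f} Q1={q1:.2f} median={med:.2f} Q3={q3:.2f} max={hi:.2f}")
```

A tight box near 1 means an implementation is consistently close to the fastest; a tall box means its relative speed depends heavily on the benchmark.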

Next I moved on to more specific Ruby comparisons to see how the various implementations were coming along with respect to completeness and performance. The Great Ruby Shootout compares execution across 8 Ruby implementations:

- Ruby 1.8.7 p299

- Ruby 1.9.1 p378

- Ruby 1.9.2 RC2

- IronRuby 1.0 (Mono 2.4.4)

- JRuby 1.5.1 (Java HotSpot(TM) 64-Bit Server VM 1.6.0_20)

- MagLev (rev 23832)

- Ruby Enterprise Edition 2010.02

- Rubinius 1.0.1

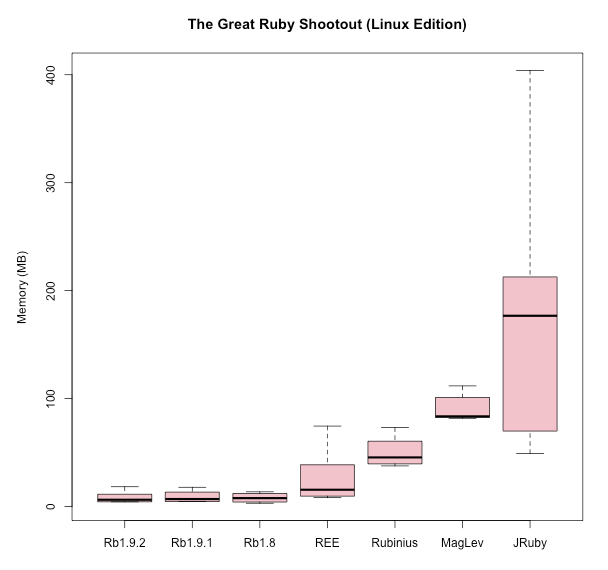

A processing benchmark is more meaningful when coupled with a memory comparison*. As the chart shows, JRuby is the heaviest hitter when it comes to memory, while the mainline interpreters (1.8.7, 1.9.1 and 1.9.2) are rather lean.
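If you want to pair time and memory for your own runs, one rough approach on Unix is to launch the benchmark as a child process and read its peak resident set size afterwards. This is a minimal Python sketch under that assumption, not how the Benchmarks Game or the Great Ruby Shootout actually measure; `time_and_peak_memory` is a hypothetical helper and the command is a placeholder:

```python
import resource
import subprocess
import sys
import time


def time_and_peak_memory(cmd):
    """Run cmd as a child process; return (wall-clock seconds, peak RSS).

    Unix-only. ru_maxrss is reported in kilobytes on Linux (bytes on macOS).
    For RUSAGE_CHILDREN it is the largest peak of any child waited on so far,
    so run one benchmark per harness invocation for a clean reading.
    """
    start = time.perf_counter()
    subprocess.run(cmd, check=True)
    elapsed = time.perf_counter() - start
    peak = resource.getrusage(resource.RUSAGE_CHILDREN).ru_maxrss
    return elapsed, peak


if __name__ == "__main__":
    # Placeholder command; swap in something like ["ruby", "mandelbrot.rb", "1000"].
    secs, peak = time_and_peak_memory([sys.executable, "-c", "x = [0] * 10**6"])
    print(f"{secs:.3f} s, peak RSS {peak}")
```

Measuring the child process rather than the harness keeps the harness's own memory out of the number, which matters when comparing lean interpreters against a JVM-hosted runtime like JRuby.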

-------------------------------------------------------------------

Notes:

* = Thanks to commenter igouy for pointing out that memory utilization is easily pulled out of the benchmarks game, e.g. the mandelbrot benchmark's memory usage.

Additional reference: a handful of specific benchmarks from http://shootout.alioth.debian.org

To get specific times and information about each benchmark, click through the image.