“Our graphs are based on starting with chaos — meaning we have a blast of news, and we say to our algorithms, find some order in this. We're creating these from scratch.”

Jim Andrus

It is with some regret that I must bring to a close this white knuckled thrill ride and comp sci overview (mutually exclusive). All good things must come to an end, and weaving these posts together was certainly a good exercise for me. There is a bastion of material to choose from, limiting my ability to properly delve into all of the interesting bits. Despite this I'm satisfied with the compressed random draw that is the result of this review. Thanks for everyone who stopped in, commented or planted the seeds for this blog series. One last time, the table of contents:

- Intro and Big O

- Object Oriented Programming

- Data Structures: arrays, lists, trees, hash tables

- Algorithms (searches, sorts, maths!)

- Graphs, Networks, and Operating Systems

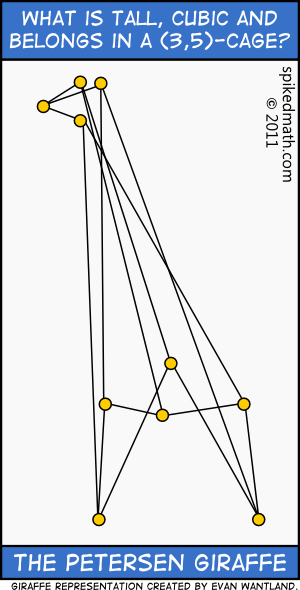

Let's jump right in with a presentation which Chris Dixon and Matt Gattis of Hunch gave to an eager audience at Google in mid February. In the slideshow the importance of graphs is made clear through several hugely successful applications of graph theory on real world problems. The metaphor from clients and connections to nodes and vertices is the magic of our time.

[scribd id=49061218 key=key-1u8ymkf45zipfepbxx09 mode=slideshow]

Graph Algorithms

Dijkstra's Algorithm

One of the properties of shortest paths through directed graphs is that any shortest path is composed of shortest paths to all nodes along it's route (optimal substructure). If there was a shorter path to an intermediary node, you'd select that one instead for the current shortest path.

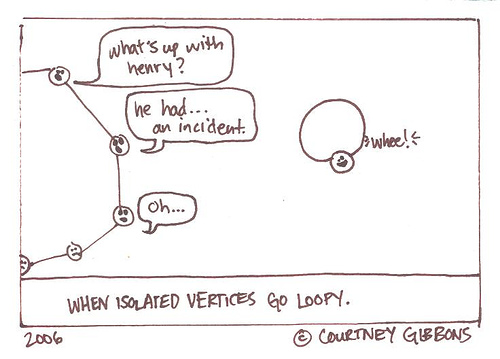

Dijkstra's algorithm is a search algorithm which finds the shortest path between an origin node and all other connected nodes (isolated nodes miss the party). Begin by setting all path lengths from the origin to all nodes to infinity, and mark them as unvisited. Set the current node to the origin.

Iteration:

Identify the distance to all adjacent nodes, adding their path lengths to the length to get to this node. Store and update any improvements in current path lengths. Mark the current node as visited. Choose a shortest path to an adjacent node as the next node to process. If there are multiple nodes with equal length, selecting any will suffice.

Each node will be visited only once and once a shortest path is calculated for all reachable nodes you're done.

[scribd id=50977483 key=key-naslhvzhgj81iotm6bd mode=slideshow]

(source)

It's hard to top the value of free university lectures when it comes to learning. This lecture by Erik Demaine is well developed and easy to follow (thanks Erik and the unknown videographer). I've included Erik's slides below.

[iframe http://videolectures.net/mit6046jf05_demaine_lec17/video/1/slides/ 550 400]

Related implementation details for Dijkstra's algorithm:

The common implementation based on a min-priority queue implemented by a Fibonacci heap and running in O(|E| + |V| log |V|) is due to (Fredman & Tarjan 1984). This is asymptotically the fastest known single-source shortest-path algorithm for arbitrary directed graphs with unbounded nonnegative weights

(source)

A* algorithm

An alternative search or shortest path approach is the A* Algorithm. A* outperforms Dijkstra's algorithm by using heuristics.

As A* traverses the graph, it follows a path of the lowest known cost, keeping a sorted priority queue of alternate path segments along the way. If, at any point, a segment of the path being traversed has a higher cost than another encountered path segment, it abandons the higher-cost path segment and traverses the lower-cost path segment instead. This process continues until the goal is reached.

(source)

A* for beginners is a good introduction to the algorithm.

<a href="#concurrency" id="concurrency"

Concurrency, Threads & Processes

</a>

"Once you start down the path of lock management the non-deterministic character of the system can quickly overwhelm your brain"

Mark Ramm

(I linked to Mr. Ramm's brief and effective introduction to threads and processes).

One of the simplest ways to scale an algorithm to many processes is horizontally. You batch concurrent and completely isolated runs and then tally their results with post processing. But there are times when running a single process with many threads will yield irresistible optimization returns. Recognizing when to walk the path of concurrent threads versus alternative options is a signal of expertise. I discussed an implementation of green threads in ruby over a couple of posts last year (1, 2), and it saved us some time waiting on remote api calls. It's worth noting that the Ruby MRI suffers from the same GIL that's mentioned in the slides on Python below.

It's beyond the scope of this post to go into great details about how operating systems handle the memory space (swapping, caching) and balance processor load (tiny red men). What I'm interested in reviewing and learning more about is how multi/thread/process applications can best be designed to take advantage of increasingly concurrent hardware to solve computationally expensive problems. First let's begin by covering definitions, and then wrap up this series with a couple slideshows which show all my baby pictures discuss concurrent processing.

Defintiions

What's a process?

Processes describe active applications. A process encapsulates the execution code and memory for a given program, and may share state information with other processes through communication channels (sockets).

What's a thread?

An executable series of code, but that are contained in processes and often share memory and other resources between each other. Threads share a processes instructions and variable states.

In order for data to be correctly manipulated, threads will often need to rendezvous in time in order to process the data in the correct order. Threads may also require mutually-exclusive operations (often implemented using semaphores) in order to prevent common data from being simultaneously modified, or read while in the process of being modified. Careless use of such primitives can lead to deadlocks.

(source)

Deadlocks

Deadlocks occur when multiple competing actions wait for each other to finish. The gruesome cousin of gridlock.

(source)

Race conditions

Race conditions arise in software when separate processes or threads of execution depend on some shared state. Operations upon shared states are critical sections that must be mutually exclusive in order to avoid harmful collision between processes or threads that share those states.

(source)

Semaphores

A semaphore is an abstract data type that brokers access to shared resources. Simple binary semaphores are either locked or unlocked. Counting semaphores keep a tally of available resources and buffer currently unavailable resource access in a queue. Really good managers act as semaphores for their teams, buffering upper management demands :).

(source)

An analogous structure to counting semaphores are shared pointers. Although they aren't directly responsible for threading, shared pointers keep a tally of active copies, and are able to free the memory of an object when it's last copy goes out of scope.

Mutex or Mutually exclusive operations

A mutually exclusive (or mutex) lock acts as a protective barrier around a resource. A mutex is a type of semaphore that grants access to only one thread at a time. If a mutex is in use and another thread tries to acquire it, that thread blocks until the mutex is released by its original holder. If multiple threads compete for the same mutex, only one at a time is allowed access to it.

(source)

Atomic Operations

Atomic operations are a simple form of synchronization that work on simple data types. The advantage of atomic operations is that they do not block competing threads. For simple operations, such as incrementing a counter variable, this can lead to much better performance than taking a lock.

(source)

Additional references on locks, mutexes, semaphores and monitors:

Although the following set of slides is Python specific, it reviews many of the core features of multiprocessing and multithreading.

I'll leave off with a set of slides which goes into further detail about multithreading and the global interpreter lock in Python

Uh oh, I forgot Networks. Maybe nobody will notice.